Dissertation Defense

Spatio-temporal Neuroimaging of Visual Processing of Human and Robot Actions in Humans

BURCU AYSEN URGEN

Thursday, Sept 17, 2015, 11 am

Cognitive Science Building, Room 003

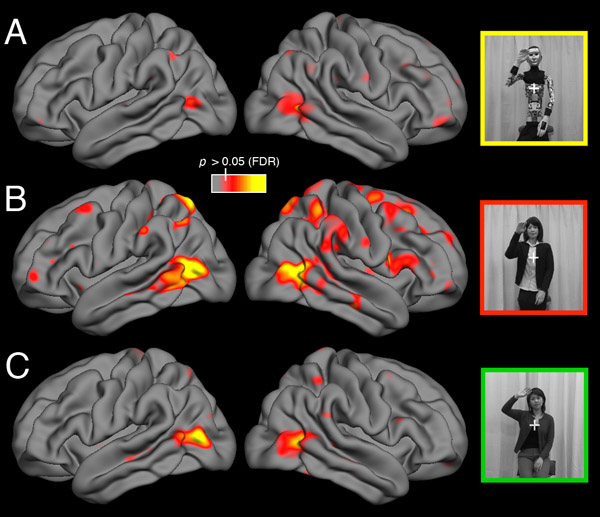

Successfully perceiving and recognizing the actions of others is of utmost importance for the survival of many species. For humans, action perception is considered to support important higher-order social skills, such as communication, intention understanding, and empathy, some of which may be uniquely human. Over the last two decades, neurophysiological and neuroimaging studies in primates have identified a network of brain regions in occipito-temporal, parietal, and premotor cortex that are associated with the perception of actions, known as the Action Observation Network. Despite a growing body of literature, the functional properties and connectivity patterns of this network remain largely unknown.

The goal of this dissertation is to address these general questions about functional properties and connectivity patterns, with a specific focus on whether this system shows specificity for biological agents. To this end, we collaborated with a robotics lab and manipulated the humanlikeness of agents that perform recognizable actions by varying visual appearance and movement kinematics. We then used a range of measurement modalities, including cortical EEG oscillations, event-related brain potentials (ERPs), and fMRI, together with a range of analytical techniques, including pattern classification, representational similarity analysis (RSA), and dynamic causal modeling (DCM), to study the functional properties, temporal dynamics, and connectivity patterns of the Action Observation Network.

While our findings shed light on whether the human brain shows specificity for biological agents, the interdisciplinary work with robotics also allowed us to address questions about human factors in artificial agent design for social robotics and human-robot interaction. One such question is the uncanny valley, which concerns what kind of robots we should design so that humans can readily accept them as social partners.

Please join us and find out more about some of Burcu’s exciting and interdisciplinary work in the lab!