I woke up this morning to see a link (shared by James C. Coyne, @CoyneoftheRealm on Twitter) to Russ Poldrack’s blog post about bad reporting of fMRI results. While I get what Poldrack is saying, and have agreed with him and others in the field about the basic problem with this kind of inference from fMRI, what I want to talk about is the fact that the post was shared with the opinion that “fMRI is not replicable and is pseudoscience”.

This is a serious insult that should not be thrown around lightly.

fMRI is one of the methods I use in my work. I do so because I believe it can answer some of the biological questions that interest me. I also know it is not just random bits of noise we evil scientists sell to journals, taxpayers and newspapers for fame and glory. (Speaking of which, where is all the fame and glory I’ve been promised?)

When I called Coyne on the assertion that fMRI was pseudoscience, he pointed to this paper as his “proof”. Many of you know it as the famous voodoo correlations paper that caused quite a kerfuffle a few years ago. That paper makes a specific statistical point: effect sizes are inflated in samples selected non-independently of the effect being measured. This affects some neuroimaging studies, and it is by no means an issue limited to neuroimaging. It’s both puzzling and unfortunate that Coyne reasoned from it that neuroimagers are pseudoscientists, but his reasoning is indicative of a problem I want to point out.

Nearly 4 years on, the voodoo paper is being thrown around by people outside our field as proof that all fMRI work is pseudoscience. It’s not just outsiders, either. Many a paper that has nothing to do with inflated effect sizes has been rejected with reference to this work. Yes, editors should call reviewers out on this, but often they don’t (and in this field one negative reviewer is enough to kill your paper). The voodoo paper made some important points, and perhaps some of the papers that did make errors in their effect size calculations deserved to be called out on their mistakes, but this makes little practical difference to the validity of the vast majority of fMRI findings. More than anything, this whole thing shows that people are very quick to jump in and hate on fMRI, pulling together as evidence bits of arguments that are at best loosely connected, or appealing to statistical minutiae that, after all, don’t affect the vast majority of research findings.

Another favorite is that salmon “study” everyone thought was brilliant, which can be summed up as “it would be really silly if people ran a ton of statistics and published the false positives”. Indeed, which is why no one ever runs one run of an fMRI study with one subject and publishes it without any correction. But don’t spoil the fun! They put a dead salmon in the scanner doing social cognition! I want to find it funny, but it’s really tiresome to have that damn salmon brought up all the time, as if the entire field were in the dark about multiple comparisons until the hero fishermen came along. They should have scanned a herring. A red herring.

If anything, publishing an fMRI study today takes more statistical sophistication and care than publishing in many other areas of science. I don’t see people attacking neurophysiology as pseudoscience (and it isn’t). Yet how many times have I read results like “n neurons of m recorded” with nary a chi-squared test? Routine statistical methods in electrophysiology research, especially event-related potential (ERP) analyses, suffer from the double-dipping issue highlighted by the voodoo paper and others. However, this is not considered a deal-breaker the way double dipping in fMRI is: choosing which ERP components to test by “visual inspection” is standard practice.

In an ideal world, scientists would exercise critical thinking before throwing around insults about others’ work. If there’s any group of people who should resist loose reasoning and cult mentality, it should be scientists.

When the issue of fMRI-hating comes up, some colleagues say we must bring bad fMRI under scrutiny so that the public knows better and the science can be improved. Exactly. I am a passionate believer in public engagement. But I believe there are many areas where actual pseudoscience gets a lot of press and has larger consequences. First, while a minority of neuroimagers may indeed have overhyped their results (probably because they’re trying to sell them to Glamour magazines that insist your work be hott!), most are fully aware of the limitations of their work. Second, peer review usually takes care of a lot of issues. Perhaps in the 90s, when fMRI was shiny and new, publication standards were lower, but today it is not at all easy to publish fMRI data. Peer review of course isn’t perfect, and bad stuff does get out, but this is not specific to neuroimaging. And third, is it really hard to understand how “well, what we’re recording is an indirect measure of brain activity that we model in this way and find that it correlates with …” often turns into “scientists have found the brain area that does X” in a newspaper article? As Poldrack mentions in his post, the NYT published a widely criticized article a few years ago, inciting critiques from leaders in the field of cognitive neuroscience. Now the NYT has gone and published a new article with the exact same error in reasoning. Calling other scientists names has hardly solved the problem.

Don’t get me wrong, it would be great if we could teach the public to be more critical in their reasoning. Informing people about how to interpret those brains with blobs they occasionally see would be nice, and I try to do so in my courses and in the lab. But is it as important as helping people be more critical of the link between autism and vaccines, or astrology and destiny, or magnets and pain relief, or gender/race and intelligence, or getting them to bother thinking through and understanding the evidence supporting evolution?

All this to say, I do not think all of this fMRI-hate is happening primarily in the interest of the public or good science. Discussions about methods and statistics can and should be held. But these should be civil, fact-based discussions among scientists (and perhaps the media). There is no need for sweeping statements and name-calling. Let’s be fair and apply some critical thinking to the issues at hand before we jump in and say fMRI (or genetics, or whatever) has been discredited (another word I keep hearing about fMRI). It’s anyone’s prerogative to take to a blog and write (as I am doing now). Fighting bad science and actual pseudoscience is important, and it should be done responsibly. Let’s not pretend that calling people names without really questioning the content or logic of their arguments is some selfless, holy work done in the name of science and the taxpayers’ enlightenment.

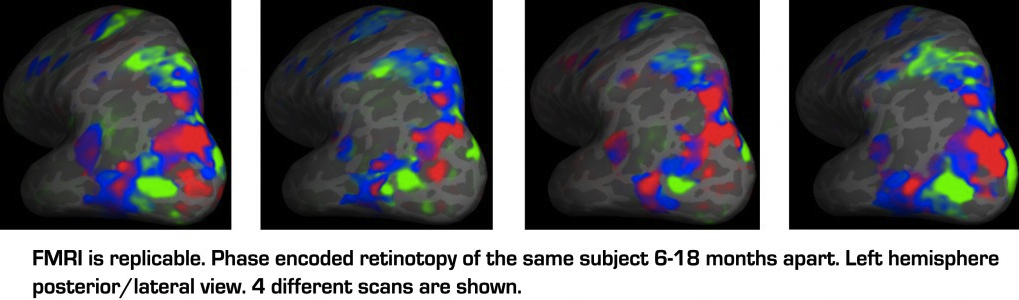

Now, of course I got defensive about the pseudoscience allegation, but let’s also address the assertion that fMRI is not replicable. This is not correct. There is good work on the replicability of fMRI; it may not be as fun and easy to read as the salmon study, but I recommend looking into it. Many people replicate effects across different studies (I like to replicate and extend with complementary methods such as neuropsychological patient work and TMS). But please allow me to add something qualitative: the image above shows fMRI data from the same subject, scanned four times over the span of five years in slight variants of the same paradigm. If you don’t know what these data are showing, don’t worry about what the experiment is for a second; just look at it with your human eyes and human brain. Do you really believe those colorful swathes look like random false positives? The same patterns emerging year after year? Can that be just noise?

Perhaps the pleasure people get from seeing fMRI lose its glamour is like finding out at the high school reunion that the prom queen is no longer a perfect size 6. But the prom queen’s jeans size does not make you any different. It may be tempting to hate on the popular and glamorous, but it’s not right to do so just because they are popular and glamorous. Scientists should do better than that.

I want to point out that, despite all my passion and defense here, fMRI is only one of the tools I use in my research. I am in fact very critical of a lot of fMRI research. I use it sparingly and try to be conservative in my interpretations. So I am hardly the best person to defend fMRI in this context. My irritation here is that irrational and unsubstantiated attacks are being made. fMRI is a pretty darn amazing feat of research and technology that has allowed us to noninvasively image human brain function at high spatial resolution. It also has many inherent limitations. The biggest problems I encounter in fMRI have little to do with statistics or false positives (which are easily addressed by replication). They are human errors in experimental design and/or interpretation of the data. This applies to any area of science, and fMRI is not immune.

I hope this misdirected fMRI hate is soon culled by actual critical thinking among scientists. Public understanding of science is poor in many countries, and federal funding has been declining. The brain is complicated enough without creating factions and cliques and name-calling. There is room for all of us as we try to tackle difficult scientific problems. If you don’t like fMRI, or if it’s not the right method for your research, don’t use it. But researchers in this field are by and large honest individuals who are more than open to using and developing the best statistical practices and experimental approaches to address their research goals.

There is no grand fMRI scam and neuroimagers do not deserve to be called pseudoscientists.

ETA: Here’s more about the NYT piece that sparked the hate. Bad reporting and sloppy reasoning hurt all of us.

Thanks Ayse, I hadn’t realized that my post was being used in this way. As someone who uses fMRI as my primary research tool, and has published work previously showing the test-retest reliability of fMRI data, I couldn’t disagree more with those who want to use this episode to paint all of fMRI as useless.

Thank you! And had I composed this message in a more organized fashion as opposed to just airing my frustration re: pseudoscience, I would have included your work as an example of just what I am talking about. So thanks for the links!

On sensationalist pseudoscientists and repeated failures to replicate:

There seems to be a fair amount of junk in every field I read; cognitive MRI is no exception. Misrepresenting or misreporting findings does the rest of us a real injustice. As I come to understand more neuroscience, I am increasingly chagrined by the poor, inadequate or lazy reviewers who let the real garbage through. It is one thing for a dumb researcher to conduct a dumb study. It is quite another for reviewers and editors to endorse and propagate the crap that lands on their desks. Who knows what huge amount of resources goes to waste following up on findings that never really existed?

Bah!

Thanks for the thoughtful and impassioned response to my Facebook sharing of a link. Too bad that more fMRI researchers do not share your sentiment. And my discontent does not just apply to fMRI researchers, as seen in my regular rants about other areas of research, including my own, psychological aspects of adaptation to cancer.

Best

Jim Coyne: “Too bad that more fMRI researchers do not share your sentiment.”

I would argue that most do. I think Ayse’s prom queen analogy is very fitting here.

Plus, you know, there was just this cool Twitter exchange on issues with fMRI. I’m linking Matt Wall’s blog post, which links to the Storify, which links to a number of highly thoughtful posts discussing issues with fMRI.

http://computingforpsychologists.wordpress.com/2012/05/28/the-immediate-and-wide-ranging-power-of-twitter-discussion-with-vaughn-bell-and-others-on-fmri/#comment-400

This discussion goes on, even though it isn’t as sexy and eye-catching as the salmon.

(Oh, what the hell, I had the storify page open, so I’ll link that too. http://storify.com/criener/fmri-discussion?awesm=sfy.co_10d9&utm_campaign=&utm_medium=sfy.co-twitter&utm_source=t.co&utm_content=storify-pingback)

Thanks for the links. As puzzling as it is for me to see the salmon rearing its red herring of a head yet again (and on such a usually rational blog), it’s good to see colleagues discussing the issues.